A complete IOT whitepaper covering the past, present and potential future of the sensor industry.

Download IOT Sensor Whitepaper by Ned Hayes – From Embedded to Pervasive (2020)

Sensors: A Brief History

A sensor is a physical or electronic device that can quantify a physical change and convert it into a ‘signal’ that can be read by an observer or by another instrument. Many different types of sensors are now deployed across a variety of devices, and with the explosion of Internet of Things (IOT) devices, the sensor market will continue to accelerate.
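
In a typical digital system, the ‘signal’ is a voltage. An analog-to-digital converter (ADC) turns that voltage into an integer count, and software maps the count back to a physical quantity. The sketch below is illustrative only. It assumes a hypothetical analog temperature sensor with a 500 mV output at 0 °C and a 10 mV/°C slope, read by a 10-bit ADC with a 3.3 V reference. Real parts publish their own transfer functions in their datasheets.

```python
# Hypothetical linear transfer function: V_out = 0.5 V + 0.01 V/°C * T.
OFFSET_V = 0.5          # assumed output voltage at 0 °C
SLOPE_V_PER_C = 0.01    # assumed 10 mV per °C


def adc_counts_to_volts(counts: int, vref: float = 3.3, bits: int = 10) -> float:
    """Convert a raw ADC reading to the voltage seen on the input pin."""
    full_scale = (1 << bits) - 1  # 1023 for a 10-bit converter
    if not 0 <= counts <= full_scale:
        raise ValueError(f"counts must be in [0, {full_scale}]")
    return counts * vref / full_scale


def counts_to_celsius(counts: int, vref: float = 3.3, bits: int = 10) -> float:
    """Invert the assumed transfer function to recover the temperature."""
    volts = adc_counts_to_volts(counts, vref, bits)
    return (volts - OFFSET_V) / SLOPE_V_PER_C


if __name__ == "__main__":
    print(round(counts_to_celsius(232), 2))  # about 24.84 °C
```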

In the 1990s and early 2000s, the use of sensors was largely confined to embedded systems, such as industrial factories that used weight, temperature and motion sensors to track physical changes in machines and systems. Most of the data from these embedded systems was processed in on-premise data centers or remote data centers (later styled “cloud” data centers). Most of these on-device sensors and their accompanying software served purely industrial applications such as device profiling and power management, and did not directly affect or influence consumer behavior on the device.

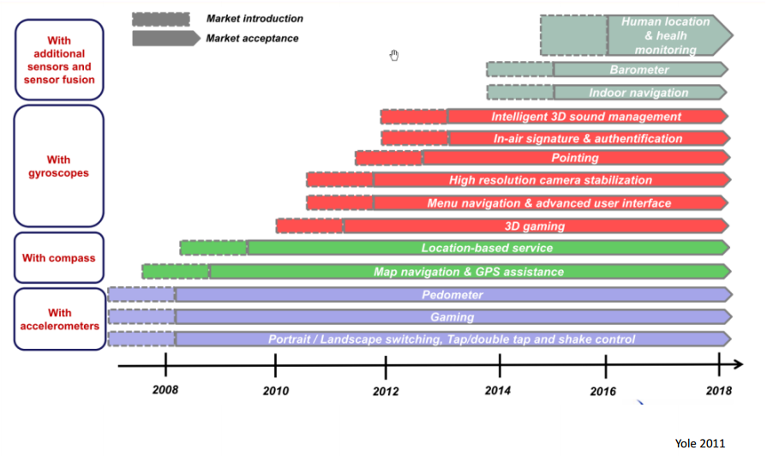

In the late 2000s, with the advent of the iPhone and Android phones, sensors began to be included on smaller consumer-ready devices. The first iPhone came pre-loaded with three sensors: an accelerometer, a proximity sensor and an ambient light sensor.

This was seen as a revolutionary breakthrough.[1] These smaller devices came with on-device computing stacks that could process the resulting sensor data immediately and change the user experience in real time based on sensor readings and contextual awareness. These experiential changes led the market to rapidly adopt a number of new sensor types over the next ten years.

Sensor fusion on the device made abstract “user states” available and made it possible to target those states with specific changes in device performance and user feedback. Manufacturers rapidly added more sensors, and the sensor world exploded into new form factors, new types of sensors and new ways to fuse this information together. For example, newer accelerometers could capture complex gestures, letting user-defined motions serve as commands to the device.
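
One of the simplest fusion techniques for phone-class motion sensors is the complementary filter. It trusts the gyroscope over short intervals, where it is smooth but drifts. It trusts the accelerometer over long intervals, where it is noisy but drift-free. The sketch below is not presented as the algorithm any particular phone uses. The single-axis tilt model, the 100 Hz sample period and the 0.98 blending weight are all assumptions.

```python
import math
from typing import Iterable, List, Tuple

# Each sample: (gyro rate about x in deg/s, accel y in g, accel z in g).
Sample = Tuple[float, float, float]


def accel_tilt_deg(ay: float, az: float) -> float:
    """Tilt about the x axis implied by the gravity vector alone."""
    return math.degrees(math.atan2(ay, az))


def complementary_filter(samples: Iterable[Sample], dt: float = 0.01,
                         alpha: float = 0.98,
                         initial_deg: float = 0.0) -> List[float]:
    """Fuse gyro and accelerometer into one tilt estimate per sample."""
    angle = initial_deg
    estimates = []
    for gyro_dps, ay, az in samples:
        predicted = angle + gyro_dps * dt       # integrate gyro (short-term)
        measured = accel_tilt_deg(ay, az)       # gravity reference (long-term)
        angle = alpha * predicted + (1 - alpha) * measured
        estimates.append(angle)
    return estimates


if __name__ == "__main__":
    # Device held still at 30 degrees: gyro reads 0, gravity splits across y and z.
    tilt = math.radians(30)
    still = [(0.0, math.sin(tilt), math.cos(tilt))] * 500
    print(round(complementary_filter(still)[-1], 2))  # converges toward 30.0
```

A larger `alpha` smooths out accelerometer noise but makes the estimate slower to correct gyro drift.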

The immediate feedback from these on-device systems, which could process sensor inputs and change the user’s experience accordingly, spurred rapid innovation in application development and the advent of “context aware computing,” which Intel’s then-CTO Justin Rattner declared an important trend in 2010.[2] Mobile devices and mobile applications began to make use not only of “hard sensing” – direct input from accelerometers, cameras and other sensors – but also of “soft sensing”, which included user-input data such as social media, online history and personal calendars.[3]

Cloud-based data services added further layers to the picture of a “user state” on a device. If a user was in a particular location and took a particular action captured by an on-device sensor, the “state” composed of those two inputs would mean something specific to the device. It could then trigger immediate, context-based changes in the user’s application experience.
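
The idea of a “user state” can be sketched as a small rule. It combines a hard-sensed input (an activity label derived from the accelerometer) with a location label and a soft-sensed input (time until the next calendar entry). All names, labels and rules here are hypothetical. They only show the shape of context-aware logic.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Activity(Enum):
    """Hard sensing: e.g. classified from accelerometer data (hypothetical)."""
    STILL = "still"
    WALKING = "walking"
    DRIVING = "driving"


@dataclass
class Context:
    activity: Activity
    location: str                    # e.g. resolved from GPS/WiFi; here a label
    next_meeting_min: Optional[int]  # soft sensing: minutes to next calendar entry


def user_state(ctx: Context) -> str:
    """Map fused hard and soft inputs to a coarse, hypothetical user state."""
    if ctx.activity is Activity.DRIVING:
        return "driving: silence notifications"
    if ctx.next_meeting_min is not None and ctx.next_meeting_min <= 10:
        if ctx.location != "office" and ctx.activity is Activity.WALKING:
            return "running late: offer directions"
        return "meeting soon: show agenda"
    return "idle: no change"


if __name__ == "__main__":
    print(user_state(Context(Activity.WALKING, "cafe", 5)))
```

Neither input alone yields “running late”. The state only emerges when the sensed activity, the location and the calendar entry are considered together.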

These additional layers of “context awareness” led to the rapid growth of sensor-derived applications that responded to soft-sensed user behavior as well as hard-sensed input.

Sensors today

Sensors today have become indispensable to consumers and enterprises. For business interests, sensors provide vital information about parameters for industrial applications such as temperature, position, chemistry, pressure, force and load, and flow and level. This information is used to influence processes and change mechanisms based on the immediate data gathered by sensors that weigh, measure and quantify manufacturing events in real time.

Technical advances have also created a number of software layers that simplify the gathering of information for consumer usages. Sensors have become smaller and less power-hungry, while also gaining accuracy and more “understanding” of their environment. Microelectromechanical systems (MEMS) like those found in current mobile devices have revolutionized our experience of the world.

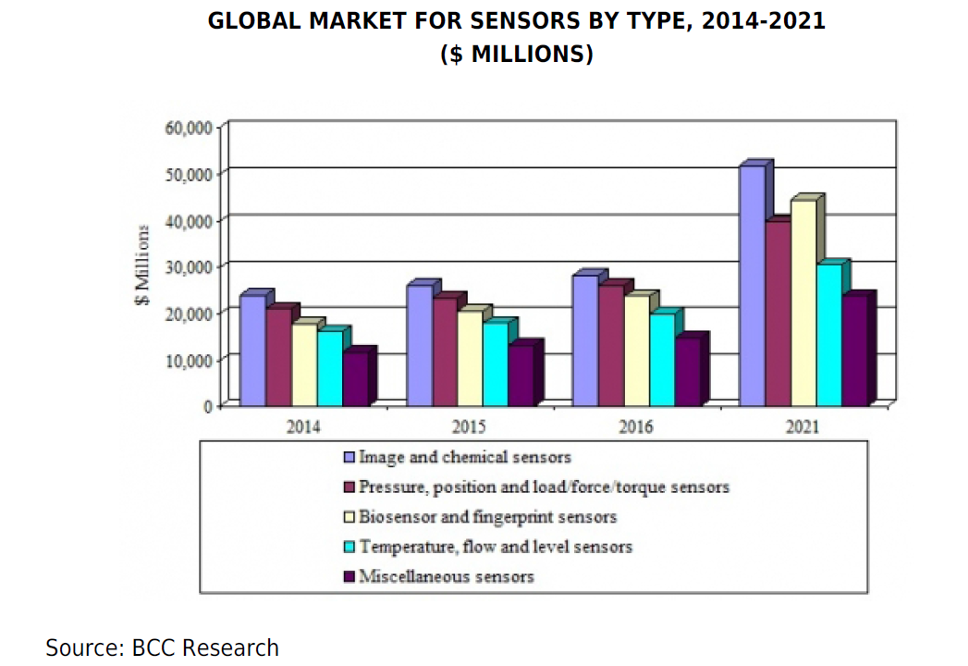

Today, the sensor industry is robust and growing.[4] The global market for sensors, valued at $91.2 billion in 2014, is expected to increase to $101.9 billion in 2015 and to $190.6 billion by 2021, at a compound annual growth rate (CAGR) of 11.0% over the five-year period from 2016 through 2021. Here’s a summary of the global market for sensors.[5]
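
The quoted growth rate can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1/n) - 1, where n is the number of compounding years. Taking the 2015 estimate ($101.9 billion) and the 2021 forecast ($190.6 billion) as endpoints gives six compounding years and a rate of about 11.0%, consistent with the figure above. This is only an arithmetic check on the numbers already quoted, not additional market data.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    rate = cagr(101.9, 190.6, 2021 - 2015)  # six compounding years
    print(f"{rate:.1%}")
```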

The explosion in both sensor usages and on-device computing stacks to analyze and produce results from that sensed data has led to additional new form factors that are sensor aware. Instead of merely a device that a user can hold in their hand, sensors are now being delivered as core functional components on everything from drones to autonomous vehicles to exoskeletons to robots.

The Emerging Sensor Ecosystem

Sensors began as heavy pieces of machinery that documented physical changes to metal tabs, shifts in width or pressure or chemical changes to certain solutions in order to report on atmospheric conditions and human body conditions. A good example of a historical “sensor” is a blood pressure cuff: the cuff tightens and provides a reading of the pulse and internal pressure inside that tightened area. These were analog sensors.

Over the past ten years, sensors that capture only individual readings of one type of data have shrunk in size. They have increasingly moved from physical changes on analog machinery to highly miniaturized components built with specialized software on specialized circuitry. Instead of requiring constant power, these miniaturized sensors have also become extremely low-powered. Some of these sensor classes need only be charged via motion (e.g., in a watch or on a wrist), via RF (radio frequency) or even via ambient light.

Moving from analog to digital sensors has accelerated the use of small, single-purpose sensors that have no built-in logic on an attached board. Sensors can be used to identify temperature or air pressure changes, or to detect gas in the environment. Some slightly more intelligent sensors can gather data on human proximity. But typically, many sensors in this emerging class are severely underpowered and constrained in their ability to gather information. They gather a single data point and that is all: Temp = 99° / Airflow = 200 cfm / Movement = Yes.
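
Because such a sensor reports one value and nothing else, its entire message can be a few bytes. The sketch below packs a single reading into a hypothetical 7-byte frame: a sensor ID, a reading-type code and a value scaled by 100 to a signed integer. The field layout and type codes are assumptions for illustration, not any standard wire format.

```python
import struct

# Hypothetical little-endian frame: uint16 sensor id, uint8 type, int32 value*100.
FRAME = struct.Struct("<HBi")
TYPES = {1: "temperature", 2: "airflow", 3: "motion"}  # hypothetical codes


def encode(sensor_id: int, reading_type: int, value: float) -> bytes:
    """Pack one reading; fixed-point scaling keeps two decimal places."""
    return FRAME.pack(sensor_id, reading_type, round(value * 100))


def decode(frame: bytes) -> tuple:
    """Unpack a frame back into (sensor id, reading name, value)."""
    sensor_id, reading_type, scaled = FRAME.unpack(frame)
    return sensor_id, TYPES[reading_type], scaled / 100
```

For example, `encode(42, 1, 99.0)` produces a 7-byte frame that decodes back to `(42, 'temperature', 99.0)`. Payloads this small are one reason such sensors can run on minimal power and bandwidth.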

Examples of this trend toward miniaturized single-purpose sensors include sensors embedded in conference rooms to detect human presence;[6] sensors in “smart shirts” to detect human motion and sleep;[7] and single-purpose factory sensors for industrial usages.[8] A multiplicity of single-use sensor types can be envisioned and are increasingly emerging from sensor ODMs and ISVs around the world.

Most of the software in this world of single-purpose sensors runs on one of two kinds of platform. One is a lower-level specialized platform such as Arduino, with a limited instruction set. The other is a variant of Linux, such as a custom distribution built with the Yocto Project. Linux variants proliferate here, and Android (itself Linux-based) sees some use as well.

Pervasive Model

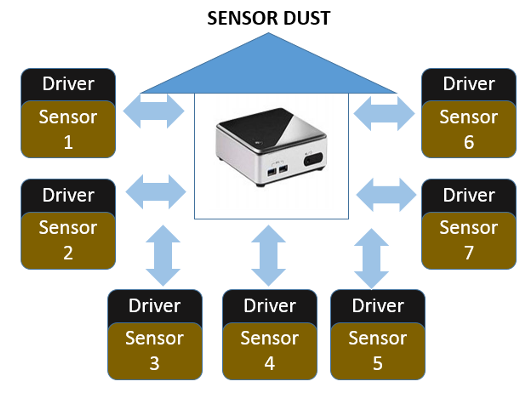

The future model of how sensors will be used is derived from the embedded world of sensors with industrial use cases. In this model, sensors are treated as individual small compartmentalized components that exist as single-purpose “data gathering” modules.

Eventually, sensors will be dispersed like dust everywhere, gathering all the data they can find in real time.

Information from individual sensors is synced (via radio frequency or other spectrum) to a central hub or to a system with larger storage capacity and a more powerful processor. That system can make sense of the data and create insights from the inputs of these various sensors. Under this model, inherited from the embedded world, sensors are becoming more pervasive and ever smaller in form factor.

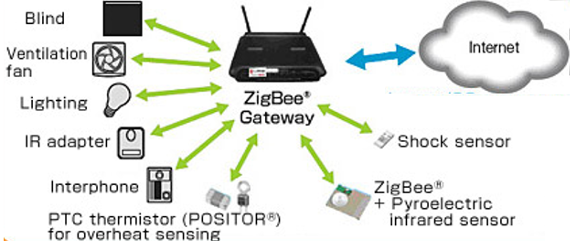

Here’s one example of a pervasive sensor implementation in a house or business facility.

In this system, individual small sensors with limited capabilities each provide a single point of data:

- Blind – whether the blinds in the house are up or down

- Ventilation Fan – whether or not the fan is running

- Lighting – the on/off status of the light

- IR adapter – connectivity to local systems via infrared

- Interphone – the phone status

- PTC thermistor overheat sensor – a temperature reading

- IR Pyroelectric – passive detection of motion and of a person’s presence in the room

- Shock – a shock sensor for intrusion detection

All of this sensor information is fed over a local wireless link (ZigBee, WiFi or similar) to a local ZigBee gateway device. Some processing of this data can be done on the gateway itself. Heavier processing, with aggregation across multiple buildings, can continue in the Internet cloud.
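
This division of labor can be sketched as a gateway that keeps only the latest value per sensor. It raises a few simple alerts locally and forwards a compact summary upstream for cloud-side aggregation. The sensor keys mirror the labels above. The message shapes, the 60 °C overheat threshold and the intrusion rule are hypothetical, and no real ZigBee stack API is used.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Union

Reading = Union[bool, float, str]


@dataclass
class Gateway:
    building_id: str
    latest: Dict[str, Reading] = field(default_factory=dict)
    overheat_c: float = 60.0  # hypothetical alarm threshold

    def on_message(self, sensor: str, value: Reading) -> None:
        """Handle one single-value report from a sensor (last value wins)."""
        self.latest[sensor] = value

    def local_alerts(self) -> List[str]:
        """The limited processing done on the gateway itself."""
        alerts = []
        temp = self.latest.get("PTC thermistor")
        if (isinstance(temp, (int, float)) and not isinstance(temp, bool)
                and temp >= self.overheat_c):
            alerts.append("overheat")
        if self.latest.get("Shock") is True and self.latest.get("IR Pyroelectric") is False:
            alerts.append("possible intrusion: shock with no one present")
        return alerts

    def summary(self) -> dict:
        """Compact payload forwarded to the cloud for cross-building aggregation."""
        return {"building": self.building_id,
                "readings": dict(self.latest),
                "alerts": self.local_alerts()}
```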

Many large technology companies (such as Intel and Microsoft) are working hard to penetrate this market. Today, however, they face an uphill battle because of the vast panoply of existing embedded system vendors and the fractured nature of this pervasive sensor market.

Architectural Stack

The architectural stack in the new world of distributed sensors typically looks something like the diagram below (the architecture is generalized away from specific hardware or software implementations, to represent activity at each level in the stack).

Applications at the top of the stack deliver experiences such as showing your fitness activity. They rely on middleware (usually Linux today) that stores data in a database and applies machine learning, predictive algorithms and data modeling.

There is very little compute on the sensor devices themselves. As a result, making sense of all the data from these pervasive sensors is a hard problem for vendors who have historically relied on many layers of compute to manage I/O.

The Problem with Proliferation

As the world of sensors has grown astronomically over the last twenty years, there has been an accompanying proliferation of new sensor vendors, new sensor solutions and new ways of fusing sensor data together into unique use cases that surface the value of that sensor data. Because no single company or industry consortium has led the way with sensor standardization or with a unified solution for exposing sensor information, there has been growing complexity and fragmentation across the industry.

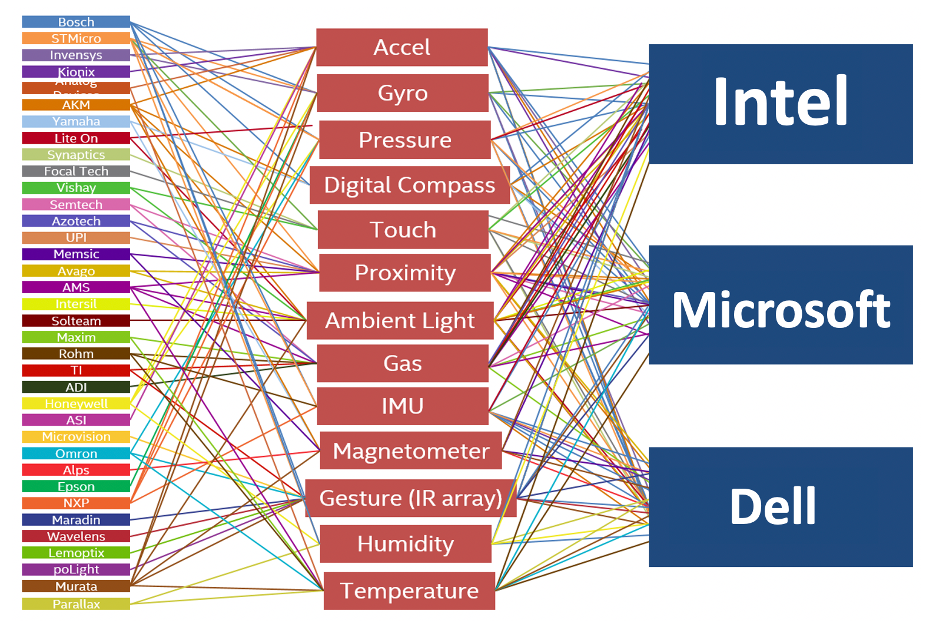

Today, there are dozens of different types of sensors with hundreds of sensor companies and third party vendors providing sensor-related solutions. There are thus thousands of potential supplier/sensor combinations.

Many companies provide platforms on which sensors are sited and on which sensor data is collected, fused and transmitted. Even so, most large, traditional hardware-focused technology companies have lacked internal cohesion around sensor solutions. This has led to fragmented offerings across different silicon and hardware technology vendors, with components that are often incompatible or unsupported. That fragmentation, in turn, has severely limited their ability to deliver end-to-end solutions for enterprise customers. Multiple suppliers and customers have now expressed concern that large technology companies are losing opportunities in sensor enablement. These losses affect core silicon platforms and their primary markets of personal computing, IOT computing and emerging computing stacks.

Here’s an overview diagram that outlines how this lost value happens for large hardware-focused technology companies and their enterprise customers.

In this flow, data from multiple sensors is brought together through sensor fusion algorithms to create Fused State Vectors, or contextual understandings that give a more holistic view of the sensor data. These higher-level understandings of sensor behavior are then used to create actual business value that can be surfaced on platforms or systems offered by hardware business units.
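
A common first step toward such a fused state vector is time alignment. Sensors report asynchronously, so each vector is built from the most recent value of every sensor at a given instant (sample-and-hold). The sketch assumes hypothetical sensor names and timestamp-sorted streams. Real fusion pipelines typically go further, with interpolation, filtering or learned models.

```python
from bisect import bisect_right
from typing import Dict, List, Optional, Tuple

Stream = List[Tuple[float, float]]  # (timestamp in seconds, value), sorted by time


def value_at(stream: Stream, t: float) -> Optional[float]:
    """Most recent value at or before time t, or None if nothing has arrived yet."""
    times = [ts for ts, _ in stream]  # rebuilt per call; fine for a sketch
    i = bisect_right(times, t)
    return stream[i - 1][1] if i else None


def fused_vector(streams: Dict[str, Stream], t: float,
                 order: List[str]) -> List[Optional[float]]:
    """Sample-and-hold every sensor stream at time t, in a fixed feature order."""
    return [value_at(streams[name], t) for name in order]
```

A `None` entry marks a sensor that has not reported yet. A downstream model must either tolerate this or wait until every slot is filled.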

New System Attributes

In the near term (within the next five years), many larger sensors, such as those found in IOT applications today, are already modeling the types of architecture and system development that we’ll see in broad deployment in “sensor dust” scenarios. The larger packaged sensors now being delivered for general usage on utility poles, in packaged light bulbs, in smart clothing and in other standard enterprise and consumer environments are part of this long-term trend.

A good contemporary example of a single-purpose sensor with consumer applications is a low-end Fitbit or Nike device,[10] which has only a motion tracker built in and no screen or user interface. This tracking sensor lacks the on-device processing capabilities of a higher-end Fitbit wristband. The sensor in such a single-purpose fitness tracker is powered by a miniature battery (some are even charged by motion itself). It must sync its information to a larger hub (such as a phone or laptop) for the information to be useful. This is a common model for this class of devices.

It is important to understand that these single-use sensors are rapidly moving toward smaller packages. They are also converging on the same architectural constructs and the same developer usage models.

In this single-purpose world, sensors share these key attributes:

- Single Purpose – each sensor derives only one small piece of data, a single data point, from its environment.

- Low Power – sensors are under-powered and may only receive power on a limited basis or can be “woken up” with light or RF to provide data they can report.

- No Processing Power – No sensor fusion happens on a single-purpose miniaturized sensor. Logic and processing boards are typically not attached to these sensors. No machine learning or predictive modeling typically happens on a sensor this small.

- Wireless Network Required – a cloud of “dust” sensors of any size requires a wireless network that can pick up data from individual sensors and collate it in one central “hub” location.
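
The “Low Power” attribute is usually achieved by duty cycling. The sensor sleeps almost all the time and wakes briefly to measure and transmit. A back-of-the-envelope battery-life estimate uses the time-weighted average current. The figures below are illustrative assumptions: a 225 mAh coin cell, 5 mA for 10 ms every 10 s, and 2 µA while asleep. The estimate ignores self-discharge and temperature effects.

```python
def average_current_ma(active_ma: float, active_s: float,
                       period_s: float, sleep_ma: float) -> float:
    """Time-weighted mean current of a sensor that wakes once per period."""
    if not 0 < active_s <= period_s:
        raise ValueError("active time must be positive and no longer than the period")
    duty = active_s / period_s
    return duty * active_ma + (1 - duty) * sleep_ma


def battery_life_years(capacity_mah: float, avg_ma: float) -> float:
    """Ideal runtime: capacity divided by average draw, converted to years."""
    hours = capacity_mah / avg_ma
    return hours / (24 * 365)


if __name__ == "__main__":
    avg = average_current_ma(active_ma=5.0, active_s=0.010, period_s=10.0, sleep_ma=0.002)
    print(f"average {avg * 1000:.1f} uA, about {battery_life_years(225, avg):.1f} years")
```

With these assumptions the average draw is roughly 7 µA, and the sleep current accounts for almost a third of it. This is why sleep current matters as much as active current for “dust”-class sensors.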

Emerging Use Cases

Today, most forecasts about a world filled with single-purpose sensors focus on industrial usages. Analysts describe scenarios in which a single-purpose sensor reports the incline vector of a machine on a factory floor, or a temperature sensor reports an engine variation. Reference architectures often emphasize API libraries, local network connectivity and wide area network connectivity.

However, these industrial use cases are short-sighted and miss a whole class of possible new use cases that are more consumer accessible and consumer adjacent.

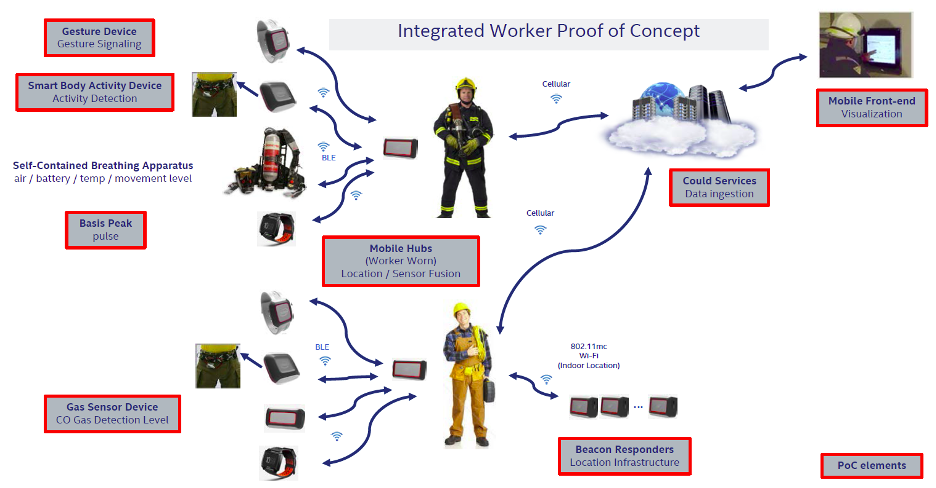

In the real world, here’s what one possible implementation of a consumer adjacent use case using “sensor dust” would look like. In this use case, small sensors that can capture gestures and other single-point inputs deliver data to a mobile hub that syncs with beacon responders and a cloud service for front-end visualization. The small “gas sensor”, “gesture sensor” and “smart body activity” sensors are miniaturized sensors that deliver only small bits of data to a central mobile “hub” device.

Human Centered Sensors

New emerging use cases come even closer to the skin of a human being. Many new sensor algorithms are being designed to better understand bio-rhythms and the human body. These body-specific sensors report the end user’s physical state. With this new class of sensors, a whole new set of data is available today: velocity, physical activity, barometric pressure, galvanic skin response and body temperature. These sensors can deliver always-on data about the human body and its bio-rhythms to any system the user authorizes to receive it.

In the Covid era, we are beginning to see companies rapidly adapt their sensor stacks and sensor fusion frameworks to always-on temperature and galvanic response data. Such data may help detect a person’s infection before a full set of symptoms appears.[10a]
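
As a rough illustration of using always-on temperature data for early warning, the sketch below flags readings that rise well above a person’s own rolling baseline. The window length, the 3-standard-deviation threshold and the 0.1 °C floor on the spread are arbitrary assumptions. This is not a clinical algorithm, and it is not the method used by any group mentioned in this paper.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Optional


class BaselineMonitor:
    """Flags readings far above a rolling personal baseline (illustrative only)."""

    def __init__(self, window: int = 48, z_threshold: float = 3.0,
                 min_std: float = 0.1):
        self.history: Deque[float] = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_std = min_std  # floor so a very flat baseline does not over-alert

    def update(self, temp_c: float) -> Optional[str]:
        """Score a new reading against the baseline, then add it to the window."""
        alert = None
        if len(self.history) == self.history.maxlen:  # wait for a full baseline
            mu = mean(self.history)
            sigma = max(pstdev(self.history), self.min_std)
            if (temp_c - mu) / sigma >= self.z_threshold:
                alert = f"elevated: {temp_c:.1f} C vs baseline {mu:.1f} C"
        self.history.append(temp_c)  # every reading joins the rolling baseline
        return alert
```

Comparing against a personal baseline rather than a fixed population threshold makes the always-on stream useful. Small but sustained deviations can stand out even when they are below a conventional fever cutoff.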

Shirley Ryan AbilityLab has partnered with Northwestern University in Chicago to develop a novel wearable device (See Figure 12).

Right now, they are creating a set of algorithms specifically designed to detect early COVID-19 symptoms and monitor patient well-being. The wearable “patch” device produces ongoing streams of data and uses machine learning methods to uncover subtle and important health insights. This sensor can continuously measure and interpret respiratory activity in ways that are impossible with traditional intermittent monitoring systems.

However, it is critical to remember that new form factors for new classes of sensors are only worthwhile if the use case is compelling enough to persuade consumers to change the habits of a lifetime and actually begin wearing or using them. Wearables are only a great biosensing opportunity if people wear them.[9]

A much easier path to broad adoption of new sensor classes and new algorithms is to deliver them on devices that consumers and enterprises already have as part of their daily productive environment.

It is possible, if unlikely, that new classes of devices that look like a Fitbit or Google Glass will become part of the common lexicon in the next five years. Many of the physical sensors found in the Nike FuelBand will no doubt be tightly integrated with these new wearable and on-skin computers. However, it is also possible that these biosensing algorithms will simply migrate to existing form factors and become part of the world of PCs and phones.

Even so, much biosensing data will be collected with existing form factors such as phones, laptops and other PCs. The world of wearable-sourced data and bio-rhythm-specific algorithms will nonetheless continue to expand in targeted verticals such as medicine and sports.

In these verticals, biosensing data is collected in a Sensor Dust model. Remote data is gathered from small sensors on a human body and connected into a local network via a hub (such as a phone or computer receiving data via WiFi, ZigBee, BLE or other protocols). A microcontroller (MCU) processes this data and displays it elsewhere for the user to examine. One example can be seen in the following flow of information in a Medical Monitoring use case:

When sensors for biometric readings are tightly integrated into form factors that consumers already use, this Sensor Dust model of gathering biosensing data on a regular basis will become a standard part of computing.

Form factors to source, analyze and display this data will include the existing PCs and phones in the consumer’s world. Once again, it is important to note that existing PC form factors will become primary avenues for gathering biosensing information. In the near future, your PC may well be able to tell you after five hours of uninterrupted work that you “seem stressed”. It would base this on data sourced in the background during your daily activities, and might suggest you “could use a 20 minute walk to de-stress and be more productive after the walk.”

As sensors continue to miniaturize, it is also likely that most clothing, footwear and accessories will begin to contain sensors of different types and classes. Tapping your PC screen for a biometric update, or slipping on a shirt or your everyday pair of shoes (both of which happen to contain biosensors), will be a much more natural activity than finding and using a use-case-specific form factor such as a Fitbit or Apple Watch.

A Future of Sensor Dust

New types of small sensors and advanced algorithms will rapidly take over consumer use cases as the trend towards miniaturization continues. There will be wallpaper or paint you can put on your wall which will detect if someone is in the room, via miniaturized embedded sensors in the paint. There will be sensors that can be attached to every piece of furniture and even utensils, to indicate motion or usage.

Sensors can easily be attached to every piece of clothing and may one day circulate in our bloodstream, reporting on our body’s performance and on detected medication levels.

Here’s one current example of a device going to market soon:[11]

Within the next ten years, low-powered single-purpose sensors may be incredibly small and as ever-present as dust. The future is “smart dust,” in which sensors are placed nearly everywhere and can gather single point information in any situation or any location or about any person.

Current lab work includes ingestible core body temperature sensors[12] as well as additional sensors that could float in the air and provide ambient readings on any environment.[13]

This would create a future market of “sensor dust” types of ultra-pervasive always-on sensors.

To cite just a single example, a tiny implant the size of a grain of sand has been created that can connect computers to the human body without the need for wires or batteries, opening up a host of futuristic possibilities.[14]

The prototype sensor has already been shrunk to a 1-millimeter cube, about the size of a large grain of sand. A sensor this tiny can float in the air, be embedded in walls or devices, or be implanted in muscles and peripheral nerves. As an implant, this kind of “dust-sized sensor” can be used to monitor internal nerves, muscles or organs in real time.

The sensor seen here, for example, contains a piezoelectric crystal that converts ultrasound vibrations from outside the body into electricity to power a tiny on-board transistor in contact with a nerve or muscle fiber. Ultrasound, which can penetrate almost every part of the body, thus serves as the power source for these sensors, which are about a millimeter across. The devices, dubbed “neural dust”, could be used to continually monitor organs like the heart in real time and, if they can be made even smaller, could be implanted in the brain.

Different styles of “sensor dust” will become ingestible, “paintable” and incredibly pervasive, leading to emergent future developments. These technologies are in the labs today and will eventually find their way to broader market adoption in paint, in clothing, in the body and in clouds that drift through urban environments.[15]

The readiness of such form factors is some distance away, and most will not hit the general consumer or enterprise market until 2025. Yet the infrastructure to deliver data from tiny sensors is already being built, and technology companies who are aware of the future must anticipate the emergence of “sensor dust” as a primary growth vector for the industry and for their own nascent endeavors.

© 2020-2022 by Ned Hayes | License for Use: Creative Commons License – Attribution, No Derivatives.

NOTES

[1] Apple iPhone 1.0 sensors included only an accelerometer, a proximity sensor and an ambient light sensor: http://www.apple.com/pr/library/2007/01/09Apple-Reinvents-the-Phone-with-iPhone.html

[2] Intel CTO looks to Context Aware Future: http://www.alphr.com/news/361216/intel-cto-looks-ahead-to-context-aware-future

[3] Intel context aware computing and soft sensors: http://www.popsci.com/technology/article/2010-09/intels-context-aware-computing-will-let-your-smartphone-sense-your-mood

[4] February 2016. BCC Research. “Global Markets and Technologies for Sensors” p. 6-8

[5] February 2016. BCC Research. “Global Markets and Technologies for Sensors” p. 6-8

[6] Human Proximity sensor: http://www.eetimes.com/document.asp?doc_id=1317369

[7] Smart shirts: http://www.wareable.com/smart-clothing/best-smart-clothing

[8] Industrial single purpose sensors for detection of wire faults: http://bit.ly/2csRUiy

[9] The actual demographic usage numbers of wearables such as Nike FuelBands, Adidas miCoach Pacer, Fitbit, Wii Fit and NordicTrack’s iFit have been disappointing in the marketplace, despite massive marketing efforts. The install base and the investment in these areas have not resulted in the expected consumer adoption at scale.

[10] Nike+ Sensor: http://www.400mtogo.com/2008/02/13/10-different-ways-to-attach-a-nike-sensor-to-your-shoe/

[10a] Researchers at Northwestern are working on new wearable sensors specific to the Covid-19 situation: https://news.northwestern.edu/stories/2020/04/monitoring-covid-19-from-hospital-to-home-first-wearable-device-continuously-tracks-key-symptoms/

[11] Peiris, V. (2013). “Highly integrated wireless sensing for body area network applications”. SPIE Newsroom. doi:10.1117/2.1201312.005120.

[12] Ingestible sensors by Google X Labs: http://getresultsinaction.com/wearables-are-so-yesterday/

[13] Sensor dust conceived and prototyped: http://www.independent.co.uk/news/science/neural-dust-implant-sensor-brain-nerves-humans-machines-prosthetics-berkeley-a7170251.html

[14] UC Berkeley scientists invent embeddable sensor dust: http://news.berkeley.edu/2016/08/03/sprinkling-of-neural-dust-opens-door-to-electroceuticals/

[15] Robert Sanders. “Sprinkling of neural dust opens door to electroceuticals.” Berkeley News Service. August 3, 2016. Reporting on studies published in the scientific journal Neuron.