The murder of George Floyd happened one month ago. Since then, protesters in the hundreds of thousands have taken to the streets to proclaim that Black Lives Matter (#blacklivesmatter) and to ask for police accountability in the face of systemic racism.

The vast majority of these protesters have been peaceful people exercising their First Amendment rights of free speech, from the clergy and artists who met in Lafayette Square in Washington, D.C. to the 60,000-strong Silent March in Seattle last week. Some have rioted, yet the FBI has confirmed that the threat of bad actors comes mostly from white supremacist elements fomenting violence.

However, we’ve all watched with horror over the last month as peaceful protesters and rioters alike have been met by police with stunning acts of over-the-top violence, from the senseless attack in Buffalo on a 75-year-old pacifist to the targeted attacks on journalists and children.

This self-destructive and lethal response to a nationwide protest against police violence has caused many technology vendors to question their previously close relationships with law enforcement groups.

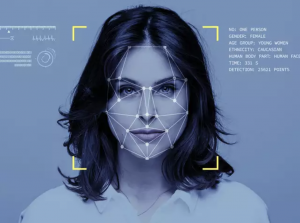

This has also pointedly revived media interest regarding the technology used to perpetuate racial biases in America’s police departments. The technology of most interest in this cultural moment is biometric tech used for identity – specifically facial recognition technology. On multiple occasions, it’s been proven that police disproportionately use surveillance technology, such as AI-based facial recognition or drone surveillance, against Black communities.

Last year, I managed two different companies that were focused on various aspects of biometrics and identity validation. In the course of the year, I met with a variety of companies that were innovating in facial recognition tech, from Robbie.AI to RealNetworks’ Dan Grimm (General Manager for the SAFR project).

What I saw in my time in the biometrics sphere was that ethical issues around facial recognition had proliferated with every expansion of the technology. Early concerns focused on the surveillance itself: should human beings be watched 24/7? Among the most concerning uses of facial analysis technology are the bolstering of mass surveillance, the weaponization of AI, and harmful discrimination in law enforcement contexts.

Algorithmic Concerns

The concerns have grown in magnitude as the tech has continued to improve. Machine learning and new predictive techniques, when used to analyze a video stream, can produce findings well beyond facial identity. They can infer emotional state, religious affiliation, class, race, gender and health status. In addition, machine learning methods can determine where someone is going (travel trajectory), where they came from (national origin), how much they make (through clothing analysis), diseases they have (through analysis of the vocal track), and much more. All of these techniques are admittedly imperfect. They don’t always recognize faces accurately: false positives and false negatives happen. Some algorithms get confused if you wear a hat or sunglasses, grow a beard, or cut your hair differently.

Even worse, the training data used to develop many early facial recognition algorithms was mostly Caucasian, so people of African and Asian descent are not recognized as well. Researchers have found that even today there is a significant disparity in the correct identification of people of color. For example, one study by MIT demonstrated that facial recognition software had an error rate of 0.8% for light-skinned men and 34.7% for dark-skinned women. This is a massive disparity and can produce extremely biased conclusions for law enforcement uses.
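To make the arithmetic behind a disparity like this concrete, here is a minimal sketch of how per-group error rates could be compared. It is not the method used in the study cited above: the function names `error_rates_by_group` and `disparity_ratio`, the record layout, and the sample counts are all assumptions chosen for illustration, and the data is synthetic.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return the fraction of wrong predictions for each group.

    `records` is an iterable of (group, predicted_label, true_label)
    tuples. This layout is a hypothetical one chosen for this sketch.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Ratio of the worst group's error rate to the best group's."""
    worst = max(rates.values())
    best = min(rates.values())
    # Avoid dividing by zero when the best group has no errors at all.
    return float("inf") if best == 0 else worst / best


if __name__ == "__main__":
    # Synthetic counts picked only to reproduce the percentages quoted
    # in the text (0.8% and 34.7%); they are not the study's real data.
    sample = (
        [("light-skinned men", "match", "match")] * 992
        + [("light-skinned men", "no match", "match")] * 8
        + [("dark-skinned women", "match", "match")] * 653
        + [("dark-skinned women", "no match", "match")] * 347
    )
    rates = error_rates_by_group(sample)
    for group, rate in rates.items():
        print(f"{group}: {rate:.1%} error rate")
    print(f"worst-to-best ratio: {disparity_ratio(rates):.1f}x")
```

Reporting a single overall accuracy number would hide this gap entirely, which is why breaking results out by subgroup matters when evaluating these systems.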

There is also a lack of oversight in how law enforcement uses facial recognition technologies. Recently, the ACLU discovered that Geofeedia was using facial recognition tech to help law enforcement identify peaceful protesters by analyzing imaging data derived from social media sources such as Facebook, Twitter, and Instagram. Given the noted algorithmic bias against minorities and the systemic bias both in the algorithms and in many police departments, we should recognize that unfettered use of this tech by law enforcement is beyond problematic. Now is the time to call out the risks of facial recognition technology and make changes.

Most of the companies playing in the enterprise space have recognized the problems, but last year they did not yet understand the magnitude of the potential issues with false recognitions or inaccurate conclusions. Therefore, most had not taken forceful steps at the time to address these issues.

Now, in this critical season of protest and lethal police over-reaction, it’s encouraging to see companies respond intelligently and forcefully to our current crisis of conscience. Most companies leading the charge in facial recognition have made public statements about policy changes that can help them be better allies to the Black community. In fact, several have announced moratoriums on offering facial recognition products for domestic law enforcement. Here’s a roundup of the most pertinent recent statements:

Facial Recognition Statements

IBM notified Congress on June 8th that they would no longer develop or offer facial recognition technologies. CEO Arvind Krishna stated clearly that IBM “firmly opposes and will not condone uses of any technology, including facial recognition technology offered by other vendors, for mass surveillance, racial profiling, violations of basic human rights and freedoms.” IBM went on to urge Congress to start “a national dialogue” on the use of facial recognition technology by law enforcement.

Microsoft soon followed up with a public statement. President Brad Smith wrote in his letter: “We’ve decided that we will not sell facial recognition to police departments in the United States until we have a national law in place grounded in human rights that will govern this technology.” Microsoft is one of the few companies that can legitimately claim consistency over the years in this critical area. Over a year ago, for example, Microsoft had already declined to allow California law enforcement to install Microsoft facial recognition technology in cars or body cams.

For a time, Google was touting its software as being a “catalyst for a culture change” at Clarkstown County Police Department, which was sued in 2017 by Black Lives Matter activists who said the department conducted illegal surveillance on them. Now, Google employees are petitioning their own company to stop selling software and services to police departments. “We’re disappointed to know that Google is still selling to police forces, and advertises its connection with police forces as somehow progressive, and seeks more expansive sales rather than severing ties with police and joining the millions who want to defang and defund these institutions,” reads the petition, which was started last week by a group of Google employees called Googlers Against Racism and is addressed to Sundar Pichai, the CEO of Google’s parent company Alphabet. “Why help the institutions responsible for the knee on George Floyd’s neck to be more effective organizationally?”

Amazon was late to recognize the importance of their business development work with police, but they have now announced a one-year moratorium for “police use” of Rekognition, the company’s nascent facial recognition technology. This is an interesting and important decision for Amazon, as the company’s Ring product has received much interest from law enforcement in recent years.

The Safe Face Pledge

Several companies have now also endorsed the Safe Face Pledge, which pre-existed society’s recognition of the lethal and destructive manner of current police tactics. Drafted by the Algorithmic Justice League and the Center on Technology & Privacy at Georgetown Law, the Safe Face Pledge is an important overview of the pertinent topics in this field. This pledge can guide companies in recognizing and taking action towards a public commitment that can mitigate the abuse of facial analysis technology. The historic pledge prohibits lethal use of the technology and lawless police use, and requires transparency in any government use. The Safe Face Pledge provides actionable, measurable steps that a company can take to follow AI ethics principles by making commitments to:

- Show Value for Human Life, Dignity, and Rights

- Address Harmful Bias

- Facilitate Transparency

- Embed Commitments into Business Practices

If you work in facial recognition tech, I’d encourage you to check out the Safe Face Pledge to understand the implications of marrying facial recognition to autonomous systems (even a door lock could be autonomous!) and to prevent risks to your employer or the larger population. Ensure you’ve educated your business decision-makers on the potential problems with the proliferation of this technology, and add ethical safeguards around who your company does business with, and how your technology is used in the future.

The Future of Facial Recognition

One World Identity has a solid summary of the current legislative landscape on facial biometrics used in law enforcement. This portion is excerpted from One World Identity.

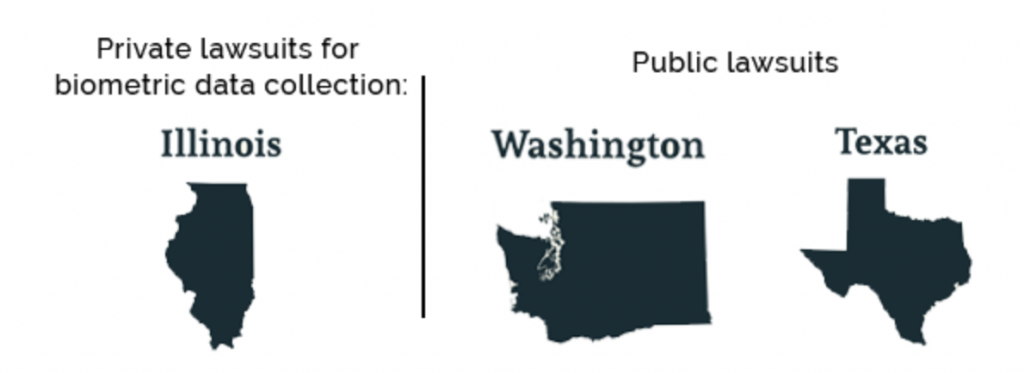

Before we can dive into what the future holds and how facial recognition tech impacts today’s social climate, it’s critical to look at the current landscape. Prevailing legislative trends indicate a lack of federal consensus on facial biometrics in law enforcement. It’s truly a patchwork slew of solutions across the US:

Only three states have banned facial recognition tech in police body cameras:

While it may seem jarring that only three states have banned these practices, it reflects how much more commonplace it is for cities to preside over facial recognition use. City agencies that have banned facial recognition technologies by law enforcement include San Francisco and Oakland, California and Brookline, Cambridge, Northampton, and Somerville, Massachusetts.

One thing to note in this regulatory variance is that other states do allow for alternative forms of recourse, including:

Although some cities and states are actively making a stand one way or another on this technology, the attitude on the horizon seems to largely be focused on oversight, not bans, despite momentum otherwise. Tellingly, 2019 was both a big year for states and cities to move against facial recognition technology and a big year for momentum on industry lobbying and influence. Last September, we saw 39 industry groups advocate for considering “viable alternatives to [facial recognition technology] bans”. Moreover, there is still little impetus to change on a national level. The Justice in Policing Act was recently proposed, which includes a ban on biometric facial recognition in police body cameras without a warrant. Its scope, however, has been criticized for largely missing the primary use cases for facial recognition. For example, it lacks meaningful application to surveillance cameras, even though law enforcement uses them for facial recognition more frequently than some of the other cited use cases. Likewise, there has been no momentum for regulating federal agencies. Most notable are the FBI, with its database of 640 million photos, and ICE, which has conducted countless permissionless facial recognition searches against state DMV databases.

Another important thing to note is that pure-play vendors have largely not been part of the tech companies announcing these moratoriums; in fact, the former have continued to court law enforcement agencies. Axon wants to continue producing technology that can “help” reduce systemic bias for law enforcement, but is also one of the largest police technology companies producing body cams. NEC Corp, a Japanese company that serves as a leading producer of law enforcement technology and hardware, is also looking to expand its business into the US, primarily with real-time facial recognition technology. Clearview AI, one of the most controversial facial recognition companies working with law enforcement, has made no statement advocating for a moratorium or ban on their technology.

Considering that over half of American adults are enrolled in some form of facial recognition network readily searchable by law enforcement officials, this should give us pause. It should lead us to reconsider current law enforcement use of facial recognition technology.

More from Ned Hayes and from One World Identity.